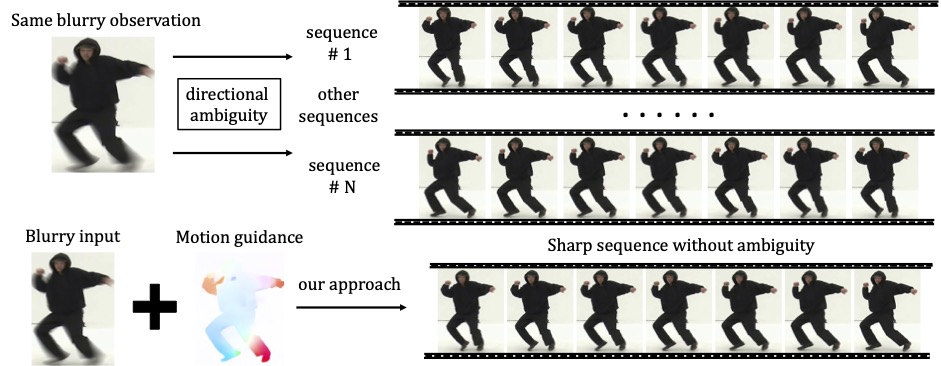

Directional Ambiguity

Blur decomposition, i.e., recovering a sequence of sharp frames from a single blurry image, faces a fundamental problem: directional ambiguity. Because motion blur averages the latent frames over the exposure time, a motion sequence and its time reversal produce the same blurry observation. Each independent, uniformly motion-blurred region can therefore correspond to either a forward or a backward motion sequence. An image containing N such regions thus admits 2^N plausible solutions, a number that grows exponentially with N. Existing blur decomposition methods, however, are designed to predict only a single one of these solutions. This directional ambiguity destabilizes training and leads to low-quality results with little diversity.
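The following toy sketch illustrates both points above under simplifying assumptions that are not taken from the method itself. Each region's motion is reduced to one scalar position per latent frame, and blur is modeled as the plain mean of those frames. The helper names (`candidate_sequences`, `blur`, `mean_prediction`) are hypothetical. The sketch enumerates every forward/backward assignment and confirms that all 2^N candidates explain the same blurry observation. It also shows one common explanation for the degraded output: a single deterministic prediction trained with an L2 loss against equally likely forward and backward targets converges to their frame-wise mean, which is a motionless sequence.

```python
from itertools import product


def candidate_sequences(regions):
    # regions: list of per-region latent sequences (toy data: one scalar
    # position per latent frame). Each region may play forward or backward,
    # giving 2 ** len(regions) candidate solutions for the whole image.
    candidates = []
    for flips in product((False, True), repeat=len(regions)):
        candidates.append(
            [list(reversed(r)) if flip else list(r) for r, flip in zip(regions, flips)]
        )
    return candidates


def blur(sequence):
    # Toy blur model (assumption): the observed value is the average of the
    # latent frames, so it is identical for a sequence and its reversal.
    return sum(sequence) / len(sequence)


def mean_prediction(targets):
    # Frame-wise mean of equally likely targets: the single output that
    # minimizes the expected L2 loss against all of them.
    n = len(targets)
    return [sum(frames) / n for frames in zip(*targets)]


regions = [[0, 1, 2, 3], [10, 20, 30]]
cands = candidate_sequences(regions)
print(len(cands))  # 4 == 2 ** 2

# Every candidate reproduces the same blurry observation in each region.
observed = [blur(r) for r in regions]
assert all([blur(r) for r in c] == observed for c in cands)

# Averaging the forward and backward targets of region 0 yields a static sequence.
print(mean_prediction([regions[0], regions[0][::-1]]))  # [1.5, 1.5, 1.5, 1.5]
```

In this toy setting the averaged prediction sits at the midpoint of the motion in every frame, so it carries no motion at all. This is one way to see why committing to a single output under directional ambiguity can hurt both quality and diversity.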