Rolling Shutter Correction Ambiguity

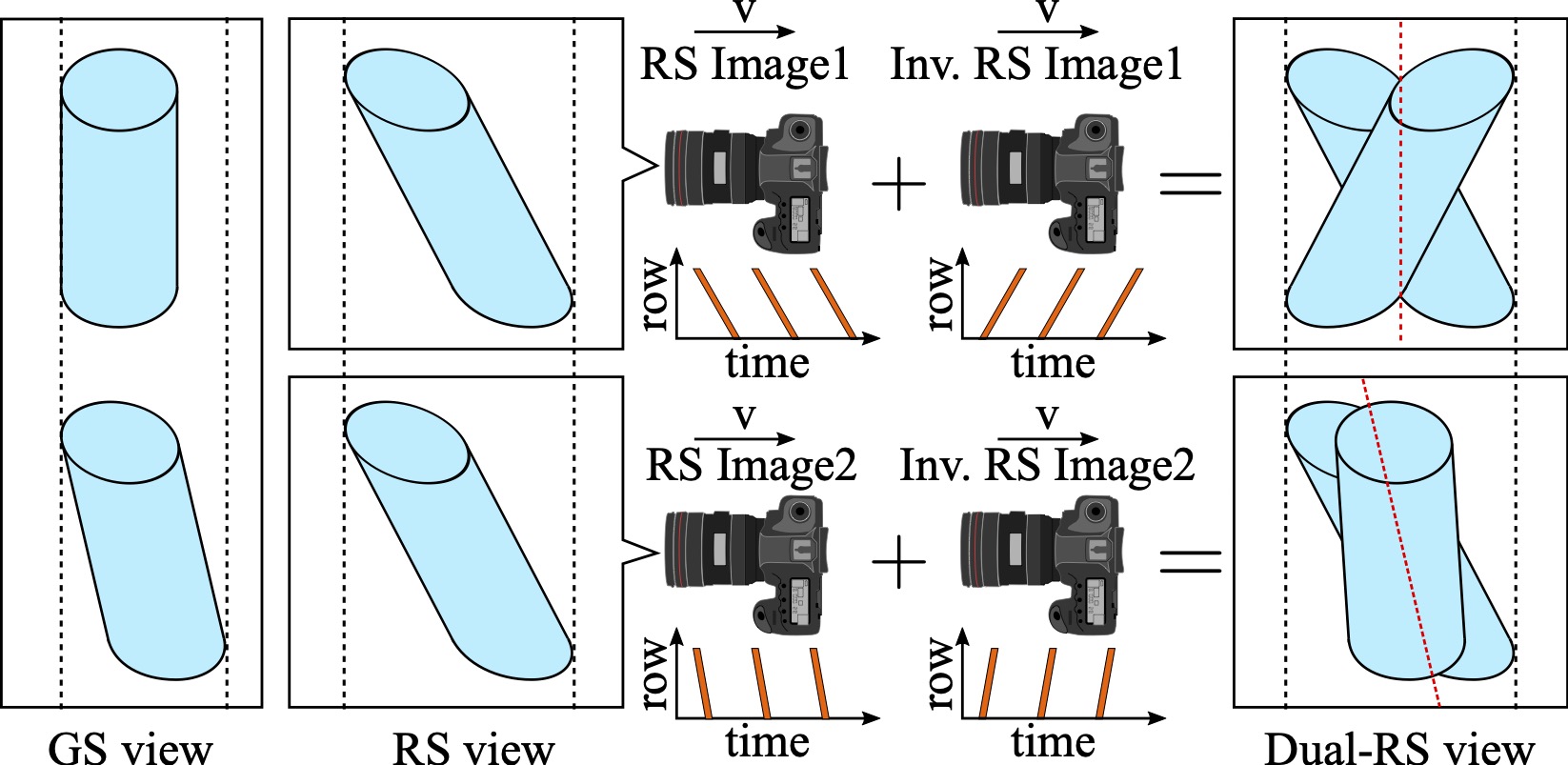

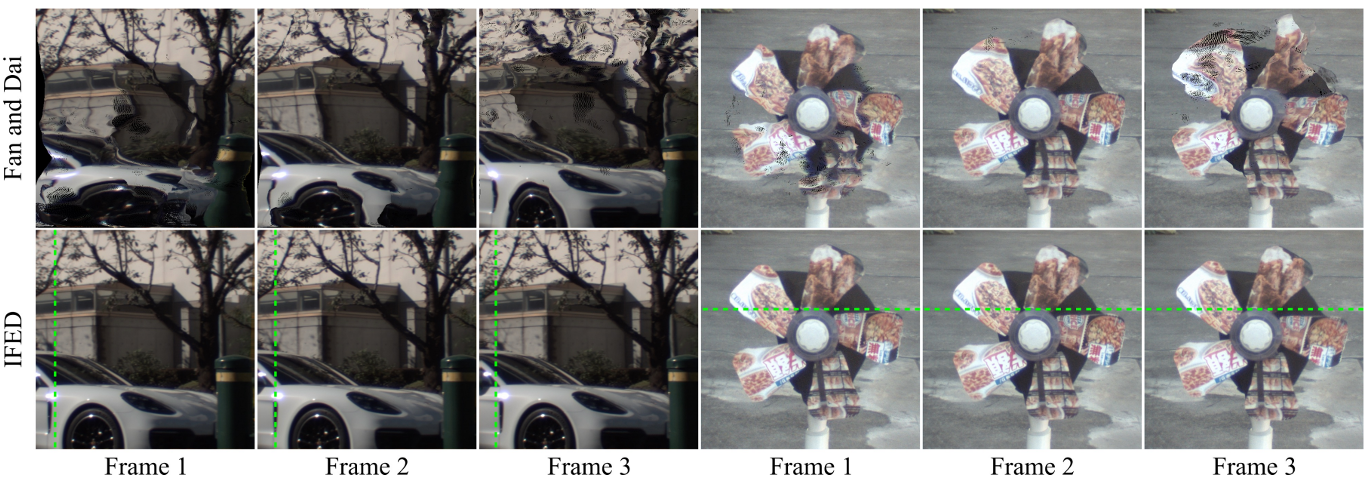

Correction ambiguity in the consecutive-frames setup: Suppose there are two similar cylinders, one of which is tilted, as shown in the GS (global shutter) view. Two RS (rolling shutter) cameras moving horizontally at the same speed but with different readout times can then produce the same RS view. Faced with data beyond its training dataset, a model cannot tell how much correction is the right amount.
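The ambiguity can be reproduced with a toy calculation. The sketch below is an illustrative assumption, not the authors' model: it tracks a single cylinder edge as one column position per image row, assumes constant horizontal image-space speed `v`, and assumes row `y` is read out at time `y * tau`. The function name `rs_edge` and all numeric values are hypothetical.

```python
import numpy as np

def rs_edge(x_gs, v, tau):
    """Per-row column of an edge as seen by a rolling-shutter camera.

    x_gs : per-row edge column in the global-shutter view (pixels)
    v    : horizontal image-space speed (pixels/second), assumed constant
    tau  : readout time per row (seconds); row y is read at time y * tau
    """
    rows = np.arange(len(x_gs))
    return x_gs - v * tau * rows

H = 8                          # number of image rows (toy value)
rows = np.arange(H)
v = 1000.0                     # same speed for both cameras
tau_a, tau_b = 1e-3, 2e-3      # different readout times

upright = np.full(H, 50.0)                        # vertical cylinder edge
tilted = upright + v * (tau_b - tau_a) * rows     # tilt chosen to cancel the readout difference

rs_a = rs_edge(upright, v, tau_a)   # camera A sees the upright cylinder
rs_b = rs_edge(tilted, v, tau_b)    # camera B sees the tilted cylinder

# Two different GS scenes, one identical RS observation.
print(np.allclose(rs_a, rs_b), np.allclose(upright, tilted))
```

Under these assumptions the RS view alone fixes only the combination of scene tilt and `v * tau`, which is why a single RS observation does not determine the amount of correction.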

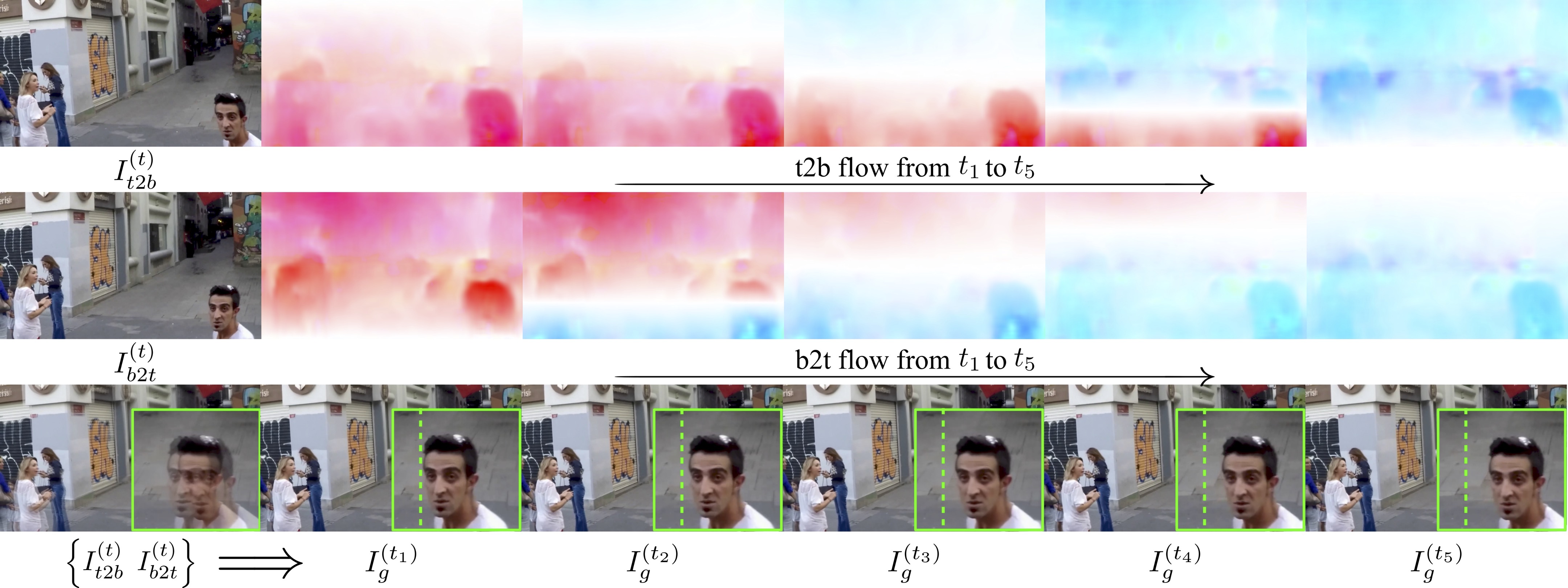

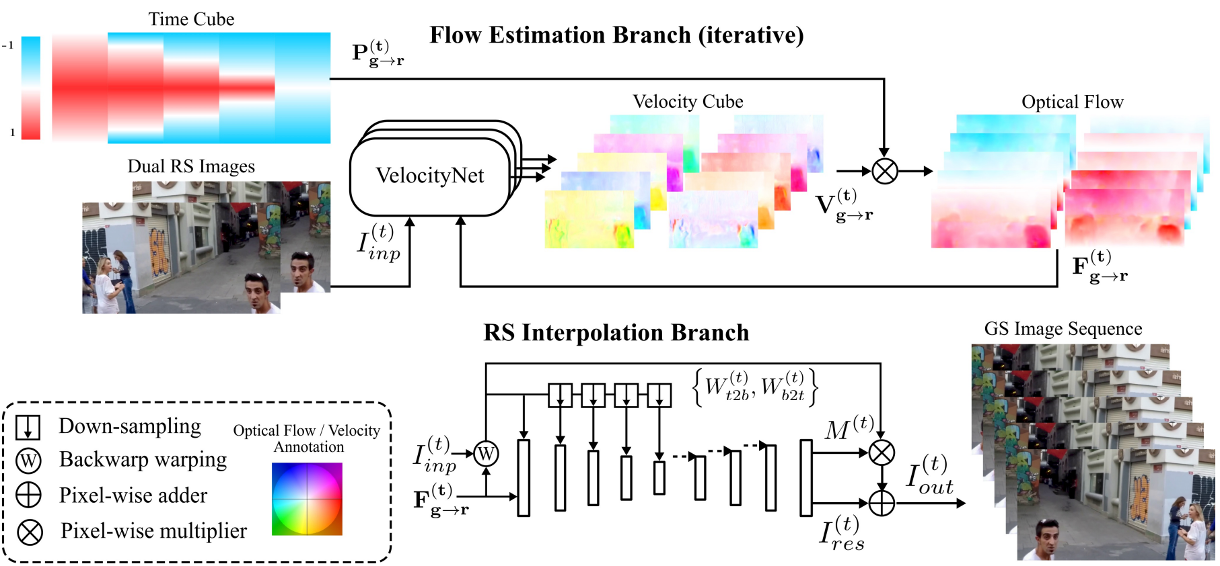

Instead of two consecutive frames, we introduce another constraint setting that exploits the intra-frame spatial constraints of dual images taken simultaneously but with reversed distortion. The dual-RS setup avoids this ambiguity because the correct correction pose can be estimated based on the symmetry.
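A minimal sketch of why the reversed pair helps, under simplifying assumptions that are ours rather than the source's: a single edge tracked as one column per row, constant horizontal image-space speed `v`, and two sensors that start readout at the same instant, one scanning top to bottom and the other bottom to top with the same per-row readout time `tau`. The helper names `dual_rs_edges` and `recover` are hypothetical.

```python
import numpy as np

def dual_rs_edges(x_gs, v, tau):
    """Edge columns seen by a top-to-bottom and a bottom-to-top RS readout."""
    H = len(x_gs)
    rows = np.arange(H)
    t2b = x_gs - v * tau * rows              # row y read at time y * tau
    b2t = x_gs - v * tau * (H - 1 - rows)    # row y read at time (H - 1 - y) * tau
    return t2b, b2t

def recover(t2b, b2t):
    """Estimate the per-row shift v * tau and the mid-readout GS edge."""
    H = len(t2b)
    rows = np.arange(H)
    # b2t - t2b = v * tau * (2 * rows - (H - 1)): the scene term cancels.
    basis = 2 * rows - (H - 1)
    shift_per_row = ((b2t - t2b) @ basis) / (basis @ basis)  # least-squares fit
    # The mean cancels the row-dependent distortion, leaving the GS edge
    # at the mid-readout time: x_gs - v * tau * (H - 1) / 2.
    x_mid = 0.5 * (t2b + b2t)
    return shift_per_row, x_mid

H = 8
rows = np.arange(H)
v = 1000.0
upright = np.full(H, 50.0)
tilted = upright + 1.0 * rows

# Two scenarios whose top-to-bottom RS views coincide.
t2b_a, b2t_a = dual_rs_edges(upright, v, 1e-3)
t2b_b, b2t_b = dual_rs_edges(tilted, v, 2e-3)

shift_a, mid_a = recover(t2b_a, b2t_a)
shift_b, mid_b = recover(t2b_b, b2t_b)
print(shift_a, shift_b)
```

In this toy model the top-to-bottom views of the two scenarios are identical, but the bottom-to-top views differ, so the pair recovers a different per-row shift and a different mid-readout edge for each scenario. Real scenes involve depth-dependent and non-uniform motion, which this sketch does not cover.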